The beating heart of El Capitan explained

The engineers behind the AMD Instinct MI300A APU have published their research on crafting the future of “exascale heterogeneous processing.” The MI300A is the processor at the heart of El Capitan, which is expected to become the world’s fastest supercomputer when it begins operation this year. It is projected to run at two exaFLOPS.

Thirteen AMD scientists collaborated on the research paper, which lays out the ways and means of reaching exaFLOPS performance. A thread on X (formerly Twitter), posted by one of the paper’s authors, provides an excellent bird’s-eye view of the research process. The MI300A itself is not news, having first become public knowledge in May 2023, but the new paper presented yesterday at ISCA 2024 sheds light on how the sausage is made: specifically, the thinking that led AMD to prioritize APUs over dedicated GPUs for exascale computing.

The Instinct MI300A traces its origins to over a decade ago, when the United States Department of Energy (DOE) selected AMD to participate in supercomputer research. The DOE was looking ahead to supercomputers running at exaflop speeds, but with the end of Moore’s Law on the horizon, it knew that deeper innovation would be needed to reach them. AMD felt that discrete graphics cards, while powerful, would impose too many space and power constraints to scale to exascale. Hence, it began research on the “Exascale Heterogeneous Processor” (EHP). The EHP concept centered on a powerful enterprise APU that could work in concert with multiple copies of itself, and the project first bore fruit in Frontier, the world’s first supercomputer to hit one exaFLOPS.

While Frontier was a massive success, becoming the fastest supercomputer on Earth at launch, AMD didn’t fully realize its EHP plans. Frontier was built on the bones of EHP research but used dedicated MI250X graphics accelerators rather than the all-in-one APU AMD had hoped for. This sacrifice was necessary to ship Frontier on time, as AMD’s 3D V-Cache stacking technology was promising but not yet ready for prime time. The third revision of EHP, planned during Frontier’s development, required, among other then-impossible feats, stacking HBM modules on top of every GPU chiplet. 3D V-Cache had to spend longer in the oven, so Frontier launched in an imperfect yet powerful state.

Eventually, 3D V-Cache matured into the revolutionary technology it is today, and EHP was ready for its final push across the finish line. The new APU built on the CPU architecture of the EPYC processors inside Frontier. With a unified Infinity Fabric memory bus, the MI300A could finally achieve transfer rates measured in TB/s between its GPU and CPU cores.

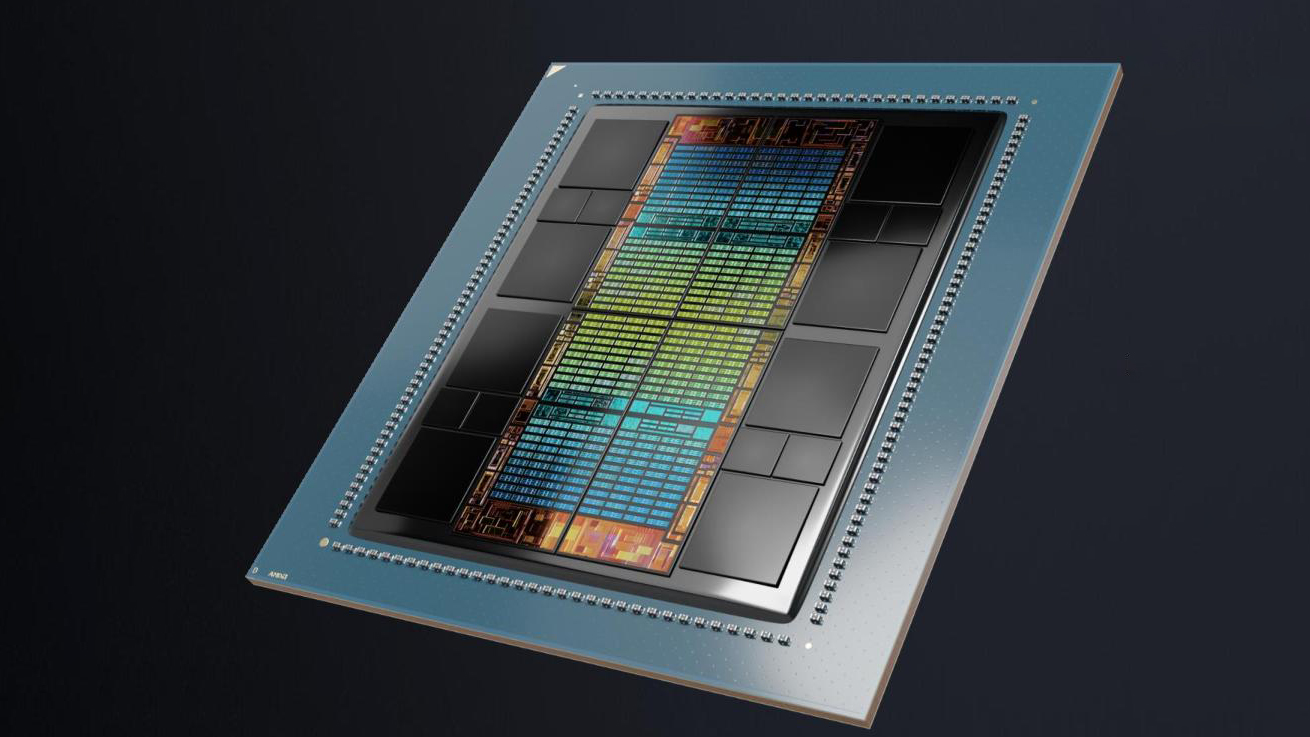

The MI300A, as the final form of the EHP project, is no joke. The APU packs 24 Zen 4 x86 CPU cores across three chiplets alongside 228 CDNA 3 GPU compute units and 128 GB of unified HBM3 memory running at 5.2 GT/s, all tied together by AMD’s fourth-generation Infinity architecture. The numbers on its spec sheet look like typos: a peak memory bandwidth of 5.3 TB/s and a theoretical peak AI performance of 3922 TFLOPS (insert three different disclaimers here).
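The 5.3 TB/s bandwidth figure follows from the 5.2 GT/s HBM3 data rate once you know how wide the memory bus is. The sketch below reproduces it, assuming eight HBM3 stacks with a 1024-bit interface each; the stack count and per-stack width are not stated in this article and are labeled as assumptions in the code.

```python
# Sketch: reproduce the MI300A's quoted ~5.3 TB/s peak memory bandwidth.
# ASSUMPTION: 8 HBM3 stacks, each with a 1024-bit interface (not stated in the article).
HBM_STACKS = 8
BITS_PER_STACK = 1024
DATA_RATE_GT_S = 5.2  # billions of transfers per second per pin (from the spec sheet)


def peak_bandwidth_gb_s(stacks: int, bits_per_stack: int, gt_s: float) -> float:
    """Peak bandwidth in GB/s: total bus width in bits x transfer rate / 8 bits per byte."""
    bus_width_bits = stacks * bits_per_stack
    return bus_width_bits * gt_s / 8


bw = peak_bandwidth_gb_s(HBM_STACKS, BITS_PER_STACK, DATA_RATE_GT_S)
print(f"{bw:.1f} GB/s = {bw / 1000:.2f} TB/s")  # prints: 5324.8 GB/s = 5.32 TB/s
```

An 8192-bit bus at 5.2 GT/s works out to about 5.32 TB/s, which rounds to the 5.3 TB/s on the spec sheet.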

The MI300A’s GPU performance is a substantial improvement over the MI250X accelerators in Frontier. In a series of synthetic HPC-workload benchmarks, the MI300A ran 1.25x to 2.75x faster than the MI250X. That roughly twofold average uplift makes a strong case that AMD and the Department of Energy were right to fight for EHP.

Of course, the MI300A isn’t meant to work alone; it is designed to be deployed in groups of four. Each APU has eight 128 GB/s Infinity Fabric interfaces, for a total of 1 TB/s of bidirectional connectivity. In a four-APU configuration, each APU can communicate with its peers at high speed while also retaining a PCIe Gen5 x16 connection. Scale this up to a supercomputer, and El Capitan, the Department of Energy’s newest toy, is estimated to run at two exaFLOPS.
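The 1 TB/s connectivity figure is simple multiplication of the per-link numbers above. This minimal sketch treats the quoted 128 GB/s per link as already bidirectional, which is the reading under which the article’s total works out; that interpretation is an assumption.

```python
# Sketch: aggregate Infinity Fabric bandwidth for one MI300A.
# ASSUMPTION: the quoted 128 GB/s per link is already a bidirectional figure.
LINKS_PER_APU = 8
GB_S_PER_LINK = 128


def aggregate_link_bandwidth(links: int, per_link_gb_s: int) -> int:
    """Total link bandwidth in GB/s across all Infinity Fabric interfaces on one APU."""
    return links * per_link_gb_s


total = aggregate_link_bandwidth(LINKS_PER_APU, GB_S_PER_LINK)
print(f"{total} GB/s")  # prints: 1024 GB/s (rounded to "1 TB/s" in the text)
```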